4D Workflows

Most of the work needed to standardize 4D workflows has already been done in proprietary workflows for visual effects and live events. The longest-established company, and perhaps the best example, is 4DViews, an INRIA spinoff based in Grenoble, France. Digital Air helped establish 4DViews in 2006-2007 by facilitating INRIA's first collaboration on a major Hollywood film, Tony Scott's Déjà Vu.

Visual Effects

The music video below for Chelmico was made with 4D assets recorded by 4DViews' Japanese partner company, Crescent in Tokyo, using 4DViews' proprietary Holosys 4D capture and processing technology, which produces .obj sequences (time sequences of discrete meshes and textures). It's a fantastic example of 4D visual effects that reveals both how the effects are achieved and what is possible: 3D transforms, time and space offsets, instancing, scaling, retexturing, and relighting.
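Because a 4D asset of this kind is just a numbered sequence of meshes, time offsets and instancing reduce to simple frame arithmetic. Here is a minimal sketch of that idea in Python. The `Instance` class, its fields, and the `source_frame` function are hypothetical names made up for illustration; this is not how Holosys or any particular tool implements it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    """One placed copy of a 4D asset (hypothetical structure, for illustration)."""
    time_offset: int                        # frames by which playback is delayed
    position: tuple[float, float, float]    # space offset applied to every mesh
    scale: float = 1.0                      # uniform scale applied to every mesh


def source_frame(timeline_frame: int, inst: Instance, frame_count: int) -> int:
    """Return the index of the mesh this instance shows at a timeline frame.

    The sequence loops, so the result always falls in [0, frame_count).
    """
    return (timeline_frame - inst.time_offset) % frame_count


# Three instances of the same 100-frame recording, staggered in time and space.
instances = [
    Instance(time_offset=0, position=(0.0, 0.0, 0.0)),
    Instance(time_offset=12, position=(1.5, 0.0, 0.0)),
    Instance(time_offset=24, position=(3.0, 0.0, 0.0), scale=0.5),
]

# Which mesh each instance displays at timeline frame 30.
frames = [source_frame(30, inst, frame_count=100) for inst in instances]
print(frames)
```

Every instance reads from the same mesh sequence, so adding another copy of the performer costs one more lookup and transform rather than another recording.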

Visual effects for 2D is where almost all 3D workflows originate. A 3D workflow can be designed from scratch and implemented once for the 2D render. There is no need to automate the workflow itself beyond the project-specific requirements, because the next project will have different requirements. This makes visual effects a playground for developing 3D workflows, but not necessarily robust ones.

That said, 4DViews' proprietary Holosys 4D capture workflow is robust, fast, and well defined, and it is available from 4DViews' partner studios around the world:

https://www.4dviews.com/volumetric-studios

Visual effects in cinema are often subtle to the point of being invisible. For each film in which visual effects are showcased, there are hundreds in which their purpose is to advance the narrative while drawing as little attention to the illusion itself as possible. As 4D assets increase in quality, their use in the latter type of visual effects production will also increase. Rendering issues specific to 4D, such as hair, can be touched up before the final shots are rendered.

Robust workflows are a must for live production. The example below, a live avatar performance by the French musician Woodkid on ZDF television in Germany, demonstrates 4DViews' ability to encode 4D content for live production. ZDF prebuilt the live broadcast project in Epic's Unreal Engine, with virtual camera movements synchronized to real camera movements and virtual lighting synchronized to live lighting. 4DViews recorded the artist in their studio and delivered the 4D assets to ZDF. The result is an impressive and complicated live 4D workflow by 4DViews and ZDF.

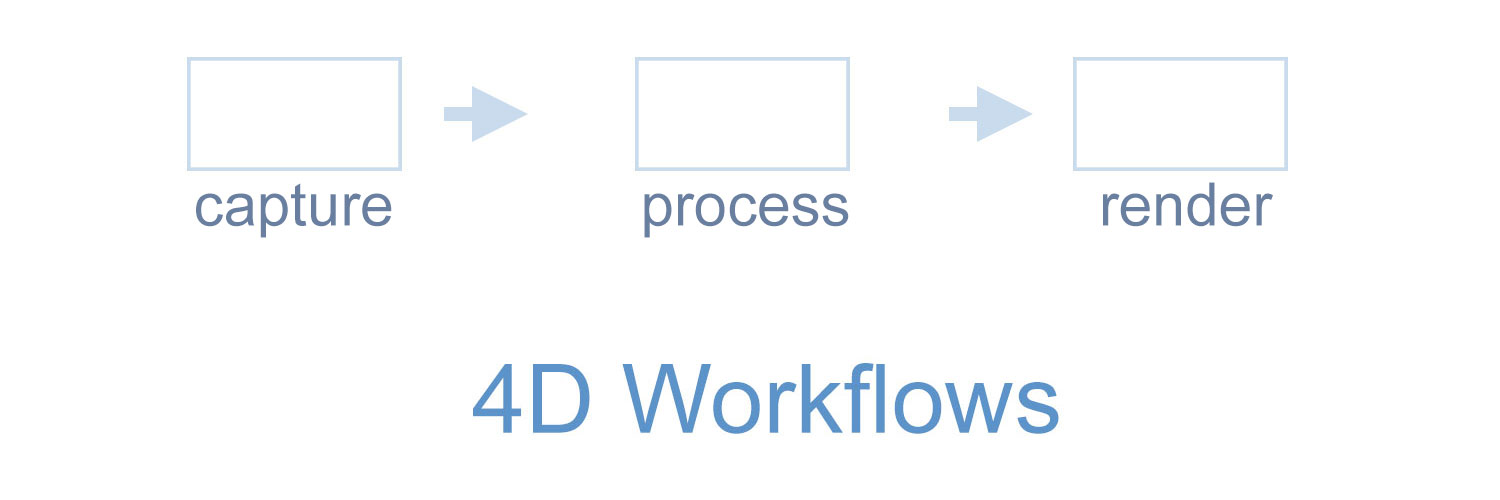

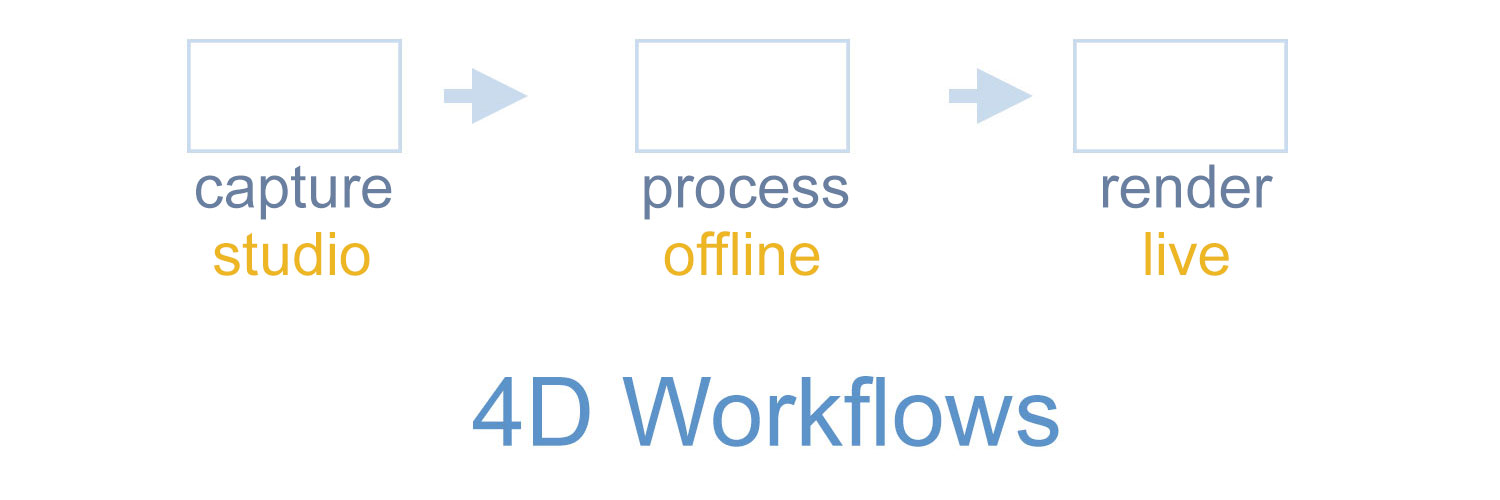

Below are links to a number of products and services that currently support multi-vendor 4D workflows. If you know of others that we should include, or if you have your own and would like us to include it, feel free to let us know. They're listed in order so you can scroll through the list and see how they fit together.

We're working on another blog post that will document in detail a completely free and open-source 4D workflow, from image capture to rendering in VFX or a graphics engine, to be released with our rights-free datasets. A summary of that workflow is: capture (raw dataset) --> Meshroom (produce photogrammetric .obj sequence) --> Blender (edit, compress, convert .obj to alembic cache and render for VFX) --> WebXR, Unreal, Unity (publish for live or XR).

A free and open-source workflow will enable researchers and companies without extensive financial resources to run the entire 4D workflow themselves and automate as much of it as they'd like. That lets them innovate on whatever aspect of it interests them, including training and testing machine learning tools. Moreover, by using our rights-free datasets they can own any proprietary software that they produce in the process. This may seem like a given, but it's not. Commercial datasets may include limited-use, non-compete, non-commercial-only, or even broad IP license-back provisions. By contrast, our approach follows directly from the spirit of the Epic MegaGrants program that partially funded the datasets: to ensure that small innovative companies have equal opportunity and incentive to innovate and to profit from those innovations.

You, your company, or your university should be able to contribute any useful steps that you develop, with as much focus on details or breadth across a complete workflow as you're interested in. And by participating in open standards you should also be able to package and sell (or release as open-source, or both) any conformant software that you create that enhances standard 4D workflows at whatever step you're interested in, including end-to-end 4D workflows. That's the spirit with which I took the idea of creating open datasets to Epic Games, and that's the reason that we're producing the datasets.

SparkAR, ARKit, ARCore, and Lens Studio are not included above because they are platform-specific and proprietary, and they are positioned at the delivery end of 4D workflows. Of course all platforms, including proprietary platforms, are important for monetization and advancing the art. We're working on separate blog posts about each of the above detailing how they each fit into 4D VFX and other 4D workflows.
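In the open-source workflow summarized above, the hand-off from photogrammetry to editing is an .obj sequence, so a useful first piece of automation is a sanity check of that sequence before it goes into Blender. The sketch below uses only the Python standard library. It assumes frames are named with a trailing frame number, such as `frame_12.obj`; that naming convention and all function names here are our own assumptions, not something Meshroom or Blender prescribes.

```python
import re
import tempfile
from pathlib import Path

# Assumed naming convention: the frame number is the last run of digits
# before the ".obj" extension, e.g. "frame_12.obj".
FRAME_RE = re.compile(r"(\d+)\.obj$")


def list_obj_sequence(folder: Path) -> list[tuple[int, Path]]:
    """Return (frame_number, path) pairs sorted numerically by frame number."""
    frames = []
    for path in folder.glob("*.obj"):
        match = FRAME_RE.search(path.name)
        if match:
            frames.append((int(match.group(1)), path))
    return sorted(frames)


def missing_frames(frames: list[tuple[int, Path]]) -> list[int]:
    """Return frame numbers absent between the first and last frame."""
    numbers = [number for number, _ in frames]
    if not numbers:
        return []
    present = set(numbers)
    return [n for n in range(numbers[0], numbers[-1] + 1) if n not in present]


def count_elements(obj_path: Path) -> tuple[int, int]:
    """Count vertex ("v") and face ("f") records in one OBJ file."""
    vertices = faces = 0
    with obj_path.open() as handle:
        for line in handle:
            if line.startswith("v "):
                vertices += 1
            elif line.startswith("f "):
                faces += 1
    return vertices, faces


# Demo on a throwaway folder holding a single triangle per frame.
TRIANGLE = "v 0 0 0\nv 1 0 0\nv 0 1 0\nf 1 2 3\n"

with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    for number in (10, 9, 12):  # frame 11 is deliberately left out
        (folder / f"frame_{number}.obj").write_text(TRIANGLE)

    sequence = list_obj_sequence(folder)
    frame_numbers = [number for number, _ in sequence]
    gaps = missing_frames(sequence)
    counts = [count_elements(path) for _, path in sequence]

print(frame_numbers, gaps, counts)
```

Sorting by the parsed number rather than by file name keeps frame 9 ahead of frame 10 even when names are not zero-padded. The per-frame vertex and face counts give a quick way to spot empty or truncated meshes before the later steps.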

See 4DViews' YouTube channel for examples of pose adjustment and other subtle effects now possible with their 4DFX editing software: https://www.youtube.com/watch?v=XYzZv_S8-i8

Live Production

Detailed Multi-vendor Workflows

Free and Open-Source Workflows for Researchers

Photogrammetry

Editing

Autorigging

Compression

Rendering

Animation